In late July, researchers began crowd-sourced experiments with an Internet-based tool that translates photographs of any object, taken from many angles, into digital 3D models.

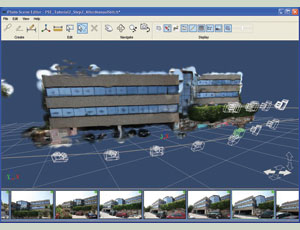

The beauty of CAD software vendor Autodesk Labs’ Photo Scene Editor for Project Photofly is its simplicity. The Photo Scene Editor can accept and process dozens of uploaded photos and return a model in minutes.

The tool harnesses cloud computing to stitch images into a 3D matrix, using algorithms that recognize and relate pixels depicting features that are common in the images. Once in 3D, features can be scaled based on a single known dimension.
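The scaling step can be illustrated with a short sketch. It is not Autodesk's code: the function names, the sample coordinates and the 0.9-meter reference length are all hypothetical, and the sketch assumes only that the model comes back as a list of 3D points in arbitrary units and that the real distance between two of those points is known.

```python
import math


def scale_factor(p, q, known_length):
    """Return the multiplier that maps model units to real-world units.

    p and q are two model points whose real-world separation
    (known_length) has been measured. Hypothetical helper.
    """
    model_length = math.dist(p, q)
    if model_length == 0:
        raise ValueError("reference points coincide; cannot derive a scale")
    return known_length / model_length


def rescale(points, factor):
    """Multiply every coordinate of every point by the same factor."""
    return [tuple(coord * factor for coord in point) for point in points]


# Hypothetical model vertices in arbitrary units.
model = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (2.0, 1.0, 0.0), (0.0, 1.0, 4.0)]

# Assumption: the edge between vertex 0 and vertex 1 measures 0.9 m in reality.
factor = scale_factor(model[0], model[1], 0.9)
scaled = rescale(model, factor)
```

Because one factor is applied uniformly, every other distance in the model takes on real-world units as well, which is why a single measured dimension is enough.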

The idea is charming, but the challenge is in getting results to come out right. Some bloggers who have experimented with it complain the interface is sluggish or difficult; others report server glitches, and some say submitted projects either failed to render or came out looking like abstract art.

Those comments are consistent with ENR’s experiments, although we are reminded that output depends on input. “Garbage in, garbage out” still applies.

The process does better with photos that separate subject from background; it has trouble with large expanses of glass. Sending more photos taken from more angles generates a better 3D image. However, we are still trying to model an offset toilet hub to our satisfaction.

But these early experiments in 3D imaging will lead to a very cool technology someday, and anybody can play. See the software at http://www.labs.autodesk.com/utilities/photo_scene_editor/.
